Elon Musk and DeepMind's pledge to never build killer AI makes a glaring omission, says Oxford academic

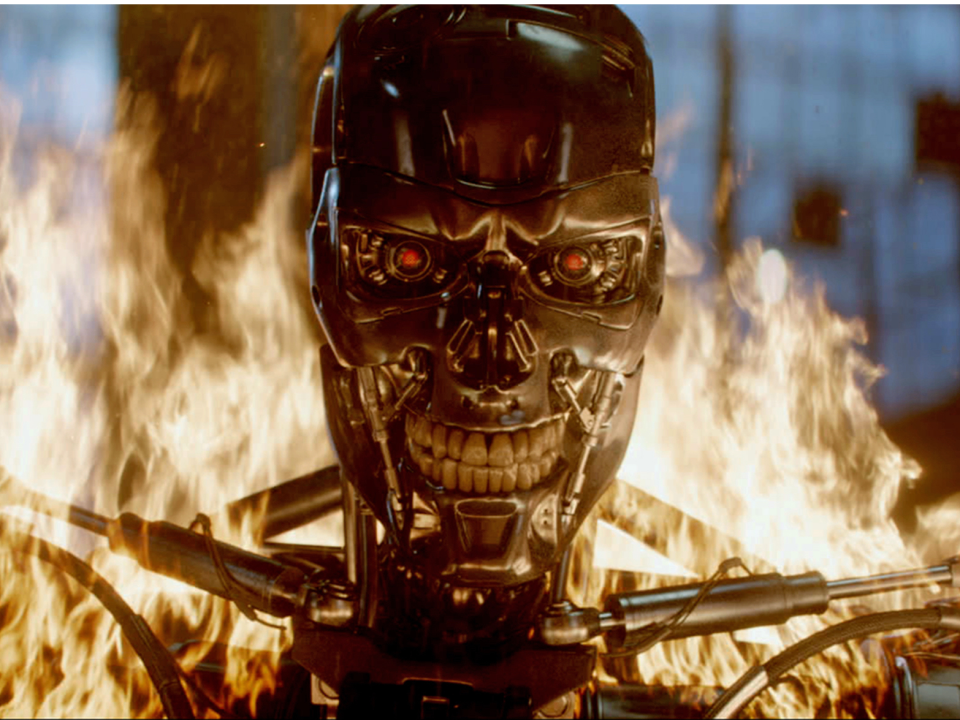

Melinda Sue Gordon/Paramount/"Terminator Genisys"

Tech leaders including Elon Musk and the cofounders of DeepMind signed a pledge last week to never develop "lethal autonomous weapons."

The letter argued that, morally, the decision to take a life should never be delegated to a machine, and that automated AI weaponry could be disastrous.

Oxford academic Dr Mariarosaria Taddeo told Business Insider that while the pledge's intentions are good, it misses the real threat posed by AI in warfare.

She believes the deployment of AI in cybersecurity has flown under the radar — with potentially damaging consequences.

Promising never to make killer robots is a good thing.

That's what tech leaders, including Elon Musk and the cofounders of Google’s AI company DeepMind, did last week by signing a pledge at the International Joint Conference on Artificial Intelligence (IJCAI).

They stated that they would never develop "lethal autonomous weapons," citing two big reasons. First, they said it would be morally wrong to delegate the decision to kill a human being to a machine.

Secondly, they believe that the presence of an autonomous AI weapon could be "dangerously destabilizing for every country and individual."

Swearing off killer robots is missing the point

Business Insider spoke to Dr Mariarosaria Taddeo of the Oxford Internet Institute, who expressed some concerns about the pledge.

"It’s commendable, it’s a good initiative," she said. "But I think they go in with too simplistic an approach."

"It does not mention more imminent and impactful uses of AI in the context of international conflicts," Taddeo added.

"My worry is that by focussing just on the extreme case, the killer robots who are taking over the world and this sort of thing, they distract us. They distract the attention and distract the debate from more nuanced but yet fundamental aspects that need to be addressed."

Is AI on the battlefield less scary than in computers?

The US military makes a distinction between AI in motion (i.e. AI that is applied to a robot) and AI at rest (which is found in software).

Killer robots would fall into the category of AI in motion, and some states already deploy this hardware application of AI. The US Navy has received a self-piloting warship, and Israel has drones capable of identifying and attacking targets autonomously, although at the moment they require a human middleman to give the go-ahead.

US Navy/John F. Williams

But AI at rest is what Dr Taddeo thinks needs more scrutiny — namely the use of AI for national cyber defense.

"Cyber conflicts are escalating in frequency, impact, and sophistication. States increasingly rely on them, and AI is a new capability that states are starting to use in this context," she said.

The "WannaCry" virus, which attacked the UK's health service (the NHS) in 2017, has been linked to North Korea, and the UK and US governments jointly blamed Russia for the "NotPetya" ransomware attack, which cost more than $1.2 billion.

Dr Taddeo said throwing AI defense systems into the mix could seriously escalate the nature of cyberwar.

"AI at rest is basically able to defend the systems in which it is deployed, but also to autonomously target and respond to an attack that comes from another machine. If you take this in the context of interstate conflict this can cause a lot of damage. Hopefully, it will not lead to the killings of human beings, but it might easily cause conflict escalations, serious damage to national critical infrastructure," she said.

There is no mention of this kind of AI in the IJCAI pledge, which Taddeo considers a glaring omission. She thinks that AI at rest garners less media attention, meaning it has slipped under the radar with little discussion.

"AI is not just about about robotics, AI is also about the cyber, the non-physical. And this does not make it less problematic," Taddeo said.

AI systems at war with each other could pose a big problem

At the moment AI systems attacking each other don't cause physical damage, but Dr Taddeo warns that this could change.

"The more our societies rely on AI, the more it’s likely that attacks that occur between AI systems will have physical damage," she said. "In March of this year the US announced that Russia had been attacking national critical infrastructure for months. So suppose one can cause a national blackout, or tamper with an air control system."

"If we start having AI systems which can attack autonomously and defend autonomously, it’s easy that we find ourselves in an escalating dynamic for which we don’t have control," she added. In an article for Nature, Dr Taddeo warned of the risk of a "cyber arms race."

Chris McGrath/Getty Images

"While states are already deploying this aggressive AI, there is no regulation. There are no norms about state behaviour in cyberspace. And we don’t know where to begin."

In 2004, the United Nations assembled a group of experts to understand and define the principles of how states should behave in cyberspace, but in 2017 they failed to reach any kind of consensus.

She still thinks the agreement not to make killer robots is a good thing. "Do not get me wrong, it’s a nice gesture [but] it’s a gesture I don’t think it will have massive impact in terms of policy making and regulations. And they are addressing a risk and there’s nothing wrong with that," she said. "But the problem is bigger."
